Credit: Mark Agnor | Dreamstime

While the term ‘spine-and-leaf’ is well understood by network designers and gaining traction in cabling circles, there is more to the new architecture, which is rapidly driving infrastructure decisions in large modern data centres.

When supporting hyperscale, big data, and cloud data centres, traditional expectations of achieving connectivity simply via patch cables, modest-capacity MPO/MTP trunks and patch panels evaporate. The challenge is to continually provision, add, and support high-density, bandwidth-greedy applications. The number of connections is not simply the number of ports in a network; rather, it is a function of transmission type, redundancy, subscription rates and switch configuration.

This article explores the factors that drive high fibre count trunk selection, and the new options and skills available to meet the connectivity needs of hyperscale deployments.

Market Drivers

Data centre build-outs continue to grow, driven by increasing bandwidth demands and changing network architectures. As a result, time-to-market windows for the data centre production environment continue to shrink.

According to LightCounting research, 40G, 100G and faster switching has recently dwarfed 10GbE at the leading Internet content providers (ICPs): Amazon, Facebook, Google and Microsoft (Figure 1).

Credit: Lightcounting

These ICPs utilise various forms of massively scalable spine-leaf topologies. Options in this area vary and may extend beyond simple spine-leaf (see Figure 2) to super spines, with compute and infrastructure port counts in the hundreds of thousands at the leaf layer. Delivering this capacity as physical fibre links is no longer a simple IT administration task.

While demand may come from different sources, such as external clients of a cloud-based services data centre or internal lines of business in a traditional enterprise data centre, the need to offer services quickly and improve time-to-market is critical. To support this, the infrastructure must be efficient to deploy, allowing large networks to move rapidly into production.

Availability is Key

Network architecture decisions have cause-and-effect implications for the structured cabling required in hyperscale data centres. Let’s look at a number of key decisions and the outcomes they present:

Network Speeds

The ubiquitous small form factor pluggable (SFP) transceiver with a duplex LC connector interface requires only 2 fibres to operate. It is available in many formats for both Ethernet and Fibre Channel, commonly at 10G and up to 40G. However, it cannot offer the efficiency and flexibility of aggregation and breakout of its more powerful cousin, the quad small form factor pluggable (QSFP).

The QSFP optical transceiver, available in both multimode and single-mode versions and operating at 40G, 100G and up to 400G, requires 8 fibres when carrying parallel transmission. Compared with the 2 fibres of a duplex SFP link, that automatically dials in a 4:1 increase in required fibre count.
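To make the 4:1 figure concrete, the short sketch below counts the fibres needed to populate a given number of switch ports with each transceiver type. The per-port values (2 fibres for a duplex LC SFP, 8 for a parallel-optic QSFP) come from the text; the 32-port switch is a hypothetical example, and the function names are illustrative only.

```python
# Fibres per port, as stated in the text: duplex LC SFP vs parallel-optic QSFP.
FIBRES_PER_PORT = {"SFP": 2, "QSFP": 8}


def fibres_required(transceiver: str, ports: int) -> int:
    """Total fibres needed to connect `ports` ports of one transceiver type."""
    return FIBRES_PER_PORT[transceiver] * ports


def fibre_ratio(transceiver: str, baseline: str = "SFP") -> float:
    """Fibre multiplier of one transceiver type relative to a baseline type."""
    return FIBRES_PER_PORT[transceiver] / FIBRES_PER_PORT[baseline]


if __name__ == "__main__":
    ports = 32  # hypothetical fully populated 32-port switch
    for kind in ("SFP", "QSFP"):
        print(f"{kind}: {fibres_required(kind, ports)} fibres")  # 64 and 256
    print(f"QSFP vs SFP: {fibre_ratio('QSFP'):.0f}:1")  # 4:1
```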

Network Architecture

Redundancy beyond simple point-to-point (P2P) links is what makes information flow so reliable in modern hyperscale data centres. Well-designed spine-leaf environments are fully fault tolerant, with balanced loads, so that an individual port failure at the server level is virtually transparent to the client.

A typical P2P architecture requiring 8 fibres in a QSFP setting escalates to 16 fibres with spine-leaf and to 32 with a full mesh. The 4:1 increase in fibre requirement over our humble P2P, SFP-dominated computer room has now become 8:1 or 16:1.
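The escalation above is simple multiplication against a 2-fibre duplex SFP baseline. The sketch below hard-codes the per-link QSFP fibre counts quoted in the text (8, 16 and 32) and reproduces the 4:1, 8:1 and 16:1 multipliers; the dictionary and function names are illustrative, not part of any tool.

```python
SFP_P2P_FIBRES = 2  # baseline: duplex LC SFP, point-to-point

# Fibres per QSFP link for each architecture, as quoted in the text.
QSFP_FIBRES_BY_ARCHITECTURE = {
    "point-to-point": 8,
    "spine-leaf": 16,
    "full mesh": 32,
}


def multiplier_over_sfp(architecture: str) -> int:
    """How many times more fibre an architecture needs than an SFP P2P link."""
    return QSFP_FIBRES_BY_ARCHITECTURE[architecture] // SFP_P2P_FIBRES


if __name__ == "__main__":
    for name, fibres in QSFP_FIBRES_BY_ARCHITECTURE.items():
        # e.g. "    spine-leaf: 16 fibres -> 8:1"
        print(f"{name:>14}: {fibres:2d} fibres -> {multiplier_over_sfp(name)}:1")
```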

Oversubscription

The inherent oversubscription rate for east-west traffic in a spine-leaf fabric is, by necessity, 1:1. Every leaf must be able to communicate with every other leaf, via the spine, without delay.

Northbound traffic through the spine to the point of delivery (PoD) runs at oversubscription rates often cited between 2:1 and 3:1. While this dilutes the fibre count requirement for core switching, the sheer number of leaves in a network creates the need for fibre cable trunks carrying thousands of fibres.
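Oversubscription is conventionally expressed as the ratio of a switch's downlink (server-facing) bandwidth to its uplink bandwidth. The sketch below computes that ratio and a rough count of leaf-to-spine fibres in a two-tier fabric where every leaf connects to every spine. The port counts, link speeds, 96-leaf/4-spine fabric and function names are all hypothetical; the 8 fibres per link assumes parallel-optic QSFP links as described above.

```python
from fractions import Fraction


def oversubscription(downlink_gbps: int, uplink_gbps: int) -> Fraction:
    """Downlink-to-uplink bandwidth ratio; Fraction(3) means 3:1."""
    return Fraction(downlink_gbps, uplink_gbps)


def leaf_spine_fibres(leaves: int, spines: int, links_per_pair: int = 1,
                      fibres_per_link: int = 8) -> int:
    """Leaf-to-spine fibres when every leaf connects to every spine."""
    return leaves * spines * links_per_pair * fibres_per_link


if __name__ == "__main__":
    # Hypothetical leaf: 48 x 25G server ports and 4 x 100G uplinks (one per spine).
    ratio = oversubscription(48 * 25, 4 * 100)
    print(f"leaf oversubscription {ratio.numerator}:{ratio.denominator}")  # 3:1

    # Hypothetical fabric: 96 such leaves, 4 spines, 8-fibre parallel-optic links.
    print(f"leaf-spine fibres: {leaf_spine_fibres(96, 4)}")  # 3072
```

Even this modest hypothetical fabric lands in the thousands of fibres, before any super-spine or PoD links are counted.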

Switch Configuration

The mix of uplinks versus server breakout ports, and the cannibalisation of SFP ports to achieve better oversubscription levels, drives the required number of fibres even higher in some instances.
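To see how the port mix moves the fibre count, consider a simplified, hypothetical leaf with 32 x 100G QSFP ports. Ports not used as uplinks are assumed to be broken out four ways to 25G servers, and each uplink is assumed to be an 8-fibre parallel-optic link back towards the spine. All constants and names here are assumptions for illustration. Shifting ports from server breakout to uplinks improves oversubscription but increases the fibre that must leave the rack.

```python
from dataclasses import dataclass
from fractions import Fraction

PORT_GBPS = 100        # assumed QSFP port speed
BREAKOUT_WAYS = 4      # assumed 4 x 25G breakout per downlink QSFP port
FIBRES_PER_UPLINK = 8  # parallel-optic QSFP uplink, per the text


@dataclass
class LeafPlan:
    server_connections: int
    oversubscription: Fraction
    uplink_fibres: int


def plan_leaf(total_ports: int, uplink_ports: int) -> LeafPlan:
    """Split a hypothetical leaf's QSFP ports between uplinks and server breakouts."""
    if not 0 < uplink_ports < total_ports:
        raise ValueError("need at least one uplink port and one downlink port")
    downlink_ports = total_ports - uplink_ports
    return LeafPlan(
        server_connections=downlink_ports * BREAKOUT_WAYS,
        # Breakout keeps per-port bandwidth, so the ratio is downlink/uplink capacity.
        oversubscription=Fraction(downlink_ports * PORT_GBPS, uplink_ports * PORT_GBPS),
        uplink_fibres=uplink_ports * FIBRES_PER_UPLINK,
    )


if __name__ == "__main__":
    for uplinks in (8, 12, 16):
        p = plan_leaf(32, uplinks)
        print(f"{uplinks:2d} uplinks: {p.server_connections:3d} servers, "
              f"{float(p.oversubscription):.2f}:1, {p.uplink_fibres} uplink fibres")
```

Moving from 8 to 16 uplinks takes this hypothetical leaf from 3:1 to 1:1 and doubles its uplink fibres from 64 to 128, while server connections fall from 96 to 64.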

The connectivity required between vast arrays of spine and leaf switches, up through super spines and PoDs, is prompting facility managers and infrastructure designers to consider High Fibre Count (HFC) trunks and ancillary devices for administering complex mesh architectures.

Impact on Structured Cabling

Traditional structured cabling deployments in the data centre were based on infrastructure designs using pre-terminated MTP assemblies ranging from 12 to 144 fibres. The architecture examples cited earlier require an order-of-magnitude increase in fibre count.

As these types of deployments become necessary, it is important to consider the impact of application, environment, and installation methods required to provide High Fibre Count (HFC) connectivity.

Application Spaces

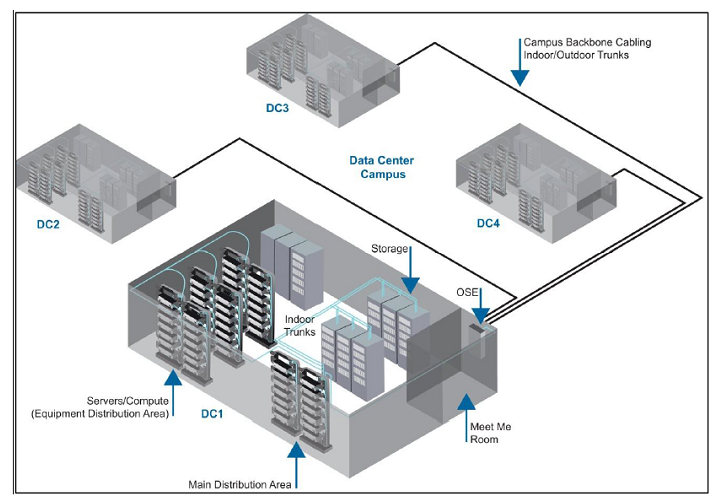

As the data centre environment evolves, the cabling and connectivity that support it must evolve as well. Figure 3 defines the areas where connectivity is required, both within a data centre and across a data centre campus.

Using multiple cables can fill the available pathway space quickly, reducing the physical room left for future growth. A better approach is to install a single high fibre count trunk with 288, 576, 864 or even 1728 fibres. These HFC trunks go beyond the simple DIY nature of MTP plug-and-play installation.
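One way to see the consolidation benefit is to count how many conventional pre-terminated trunks a single HFC trunk displaces. The sketch assumes the largest traditional assembly is 144 fibres (the top of the 12 to 144 fibre range quoted for traditional deployments) and uses the HFC sizes listed above. It counts cables only; actual pathway fill depends on cable diameters, which are not modelled here.

```python
import math

TRADITIONAL_MAX_FIBRES = 144        # top of the traditional MTP trunk range
HFC_SIZES = (288, 576, 864, 1728)   # HFC trunk sizes from the text


def trunks_replaced(hfc_fibres: int, trunk_fibres: int = TRADITIONAL_MAX_FIBRES) -> int:
    """Number of conventional trunks needed to carry the same fibre count."""
    return math.ceil(hfc_fibres / trunk_fibres)


if __name__ == "__main__":
    for size in HFC_SIZES:
        print(f"{size:4d}-fibre HFC trunk replaces {trunks_replaced(size)} x 144-fibre trunks")
    # At the small end of the traditional range, the gap is far wider:
    print(f"1728 fibres as 12-fibre trunks: {trunks_replaced(1728, 12)} cables")  # 144
```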

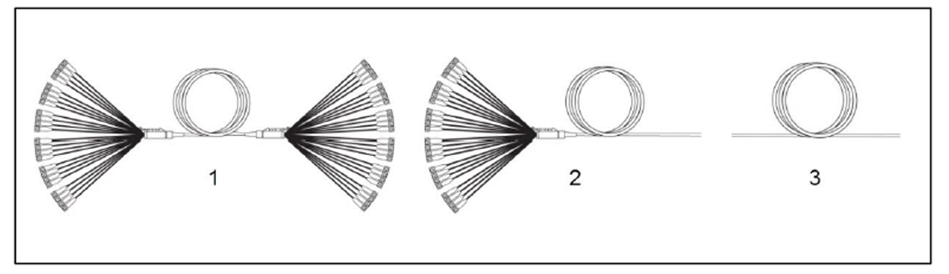

The MTP terminations on these HFC trunks can then either be broken out into individual ports using MTP-LC modules or used directly for MTP interfaces. Because infrastructure designs, cabling environments and pathway types vary, MTP connectivity in the backbone cabling can be achieved by several methods, as shown in Figure 4:

1) Cables that are factory-terminated on both ends with MTP connectors (MTP Trunk Assemblies)

2) Cables that are factory-terminated on one end with MTP connectors, with the blunt cable end then field-terminated or spliced (MTP Pigtail Trunks or Pre-stubbed housings)

3) Bulk cables that are field-terminated on both ends with MTP Splice-On Connectors (SOCs), MTP pigtails or Pre-stubbed housings.
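When MTP terminations are broken out through MTP-LC modules, each duplex LC port consumes two fibres. The sketch below counts the MTP connectors and duplex LC ports a fully broken-out trunk yields. It assumes 12-fibre MTP connectors with all 12 fibres used by default (a 24-fibre MTP halves the connector count); the function name is illustrative.

```python
def breakout(trunk_fibres: int, fibres_per_mtp: int = 12) -> tuple[int, int]:
    """Return (MTP connectors, duplex LC ports) for a fully broken-out trunk."""
    if trunk_fibres % fibres_per_mtp:
        raise ValueError("trunk fibre count must be a multiple of the MTP size")
    mtp_connectors = trunk_fibres // fibres_per_mtp
    duplex_lc_ports = trunk_fibres // 2  # each duplex LC port uses 2 fibres
    return mtp_connectors, duplex_lc_ports


if __name__ == "__main__":
    for fibres in (144, 288, 1728):
        mtps, lc = breakout(fibres)
        print(f"{fibres:4d} fibres -> {mtps:3d} x MTP-12 -> {lc:3d} duplex LC ports")
```

A 1728-fibre trunk therefore lands as 144 twelve-fibre MTP connectors, or 864 duplex LC ports if everything is broken out.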

MTP-MTP Trunk Assemblies

MTP Trunk Assemblies (Figure 5) are used where the entire fibre count lands at a single location at each end of the link, for example from the Main Distribution Area (MDA) to the Horizontal Distribution Area (HDA) or to the Equipment Distribution Area (EDA).

MTP Pigtail Trunks

MTP Pigtail Trunks have two primary use cases. The first is in environments where the pathway will not allow a pre-terminated end with a pulling grip to pass through, such as a small conduit (see Figure 6).

The second is in environments where the high fibre count assembly consolidates inter-building fibre connectivity on a campus and is then broken out to multiple lower fibre count assemblies at distribution areas within the building (see Figure 6). Pigtail trunks are also useful when the exact pathway or route cannot easily be measured before the assembly is ordered.

MTP Pigtail Trunks with MTP Splice-On Connectors (SOC)

Field-terminating a high fibre count MTP pigtail trunk directly with MTP connectors is recommended when the assembly lands at a single cabinet or location (as shown in Figure 7).

High fibre count ribbon cable can be terminated with the MTP field-termination or splicing options discussed above. In addition to the recommended MTP-based designs, alternative duplex termination methods include:

- Splicing to pre-stubbed housings (central splice location)

- Splicing to EDGE splice cassettes (splice at each housing)

The Bottom Line

As you can see, each solution offers advantages depending on the application environment, design requirements and installation needs. Key needs of the data centre environment to consider when evaluating these solutions include:

- Speed of deployment

- Product quality and performance

- Migration path to 400G & future-ready technology support with Parallel Optics

Additional considerations include fibre count, pathway type and ability to plan for or measure cable routes.

These considerations typically come into play in data centre campus environments, where cabling is required to connect multiple data centre buildings.
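The selection guidance in this article can be condensed into a rough rule of thumb. The sketch below is a hypothetical simplification, not a vendor selection tool: it encodes only the criteria named here (whether the full fibre count lands at one location at each end, whether the pathway will pass a pre-terminated end with a pulling grip, and whether the route can be measured before ordering). The `Link` type and `suggest_method` function are illustrative names.

```python
from dataclasses import dataclass


@dataclass
class Link:
    single_location_both_ends: bool  # whole fibre count lands at one place per end
    pathway_fits_pulling_grip: bool  # e.g. False for a small conduit
    route_measurable: bool           # can the length be measured before ordering?


def suggest_method(link: Link) -> str:
    """Hypothetical rule of thumb based on the use cases described above."""
    if not link.pathway_fits_pulling_grip or not link.route_measurable:
        # A blunt pigtail end passes tight pathways and is terminated or
        # spliced in the field once the route is known.
        return "MTP pigtail trunk (field-terminate or splice the blunt end)"
    if link.single_location_both_ends:
        return "MTP-MTP trunk assembly"
    # Campus consolidation broken out at several distribution areas.
    return "MTP pigtail trunk broken out to lower fibre count assemblies"


if __name__ == "__main__":
    mda_to_hda = Link(single_location_both_ends=True,
                      pathway_fits_pulling_grip=True,
                      route_measurable=True)
    print(suggest_method(mda_to_hda))  # MTP-MTP trunk assembly
```

Real projects weigh further factors (speed of deployment, product quality, the migration path to 400G), so treat the output as a starting point for discussion rather than a decision.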