Credit: Nvidia

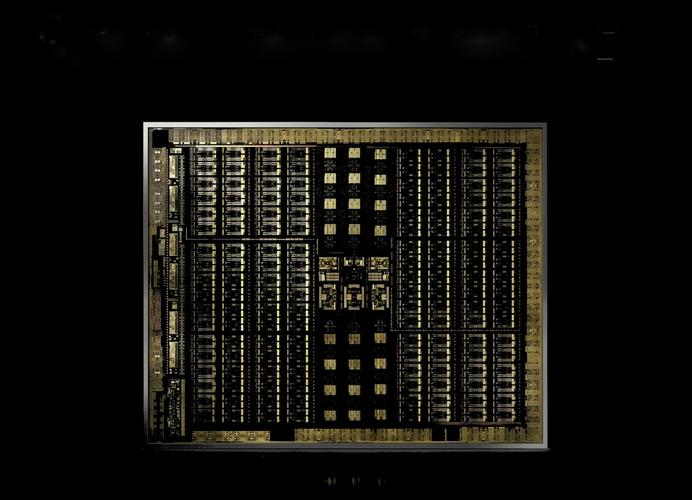

Inside Nvidia’s Turing GPU: RT cores

Let’s start with the RT cores. Nvidia never said so explicitly, but “RT” likely stands for “ray tracing,” as this hardware is included in Turing GPUs solely to accelerate ray tracing performance. Ray tracing’s the marquee feature of the GeForce RTX 20-series—enough so that these new GPUs drop the longstanding “GTX” name.

Ray tracing simulates the way light works in the real world, casting out rays to interact with objects in a scene, rather than “faking it” like rasterization, the rendering method traditionally used in games. It’s what makes CGI movies so beautiful. But ray tracing is incredibly computationally expensive, which is why it’s been considered gaming’s exalted and unattainable Holy Grail for decades now. Even Nvidia’s RTX implementation, built on top of Microsoft’s forthcoming DirectX Raytracing API, doesn’t provide “true” ray tracing. Instead, it’s a hybrid rendering model that leans on traditional rasterization techniques for most of the scene, then turns to ray tracing for light, shadow, and reflections. It’s visually impressive even in this form, though.

Nvidia’s RTX ray tracing implementation is built around bounding volume hierarchy (BVH) traversal, using a technique that tests whether an object was hit by a ray of light by breaking it into a series of large boxes rather than scanning each of its gajillion small triangles. Each box quickly reports whether it was hit by the ray; if one was, it’s split into a smaller set of boxes, and the one in there that’s hit gets split into another series of small boxes, and so on. Finally, when you get down to the smallest set of boxes, it’s split out into the object’s individual triangles. When the final triangle’s hit, it provides location and color information.
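To make the box-in-a-box idea concrete, here’s a minimal Python sketch of BVH traversal. It’s an illustration under my own assumptions, not Nvidia’s implementation: the `Box` class, the two-leaf scene, and the triangle names are all hypothetical, and the box check is the common “slab” test for axis-aligned boxes.

```python
from dataclasses import dataclass, field
from typing import List, Tuple

Vec3 = Tuple[float, float, float]

@dataclass
class Box:
    """Hypothetical BVH node: an axis-aligned box holding child boxes or triangles."""
    lo: Vec3
    hi: Vec3
    children: List["Box"] = field(default_factory=list)
    triangles: List[str] = field(default_factory=list)  # leaf payload (just names here)

def ray_hits_box(origin: Vec3, direction: Vec3, box: Box) -> bool:
    """Slab test: does the ray pass through the box at some distance t >= 0?"""
    t_near, t_far = 0.0, float("inf")
    for axis in range(3):
        o, d = origin[axis], direction[axis]
        lo, hi = box.lo[axis], box.hi[axis]
        if abs(d) < 1e-12:
            # Ray runs parallel to this pair of faces: it must start between them.
            if o < lo or o > hi:
                return False
            continue
        t0, t1 = (lo - o) / d, (hi - o) / d
        if t0 > t1:
            t0, t1 = t1, t0
        t_near, t_far = max(t_near, t0), min(t_far, t1)
        if t_near > t_far:
            return False
    return True

def candidate_triangles(origin: Vec3, direction: Vec3, root: Box):
    """Descend only into boxes the ray hits; return (triangles to test, boxes tested)."""
    candidates, boxes_tested = [], 0
    stack = [root]
    while stack:
        box = stack.pop()
        boxes_tested += 1
        if not ray_hits_box(origin, direction, box):
            continue  # a miss skips everything inside this box
        if box.children:
            stack.extend(box.children)
        else:
            candidates.extend(box.triangles)
    return candidates, boxes_tested

# Made-up scene: one big box split into a left half and a right half.
left = Box((0, 0, 0), (2, 4, 1), triangles=["t0", "t1"])
right = Box((2, 0, 0), (4, 4, 1), triangles=["t2", "t3"])
root = Box((0, 0, 0), (4, 4, 1), children=[left, right])

candidates, boxes_tested = candidate_triangles((1, 1, -5), (0, 0, 1), root)
print(candidates, boxes_tested)
```

The ray misses the right-hand box, so its triangles never get tested at all. In a real scene, that pruning repeats at every level of the hierarchy.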

Testing ray tracing via BVH groupings lets games go from scanning millions of potential triangle targets down to mere dozens, according to Nvidia’s Jonah Alben. But performing BVH traversal with traditional shader hardware doesn’t work well, because it takes several thousand software instruction slots per ray to work through all the boxes and find those final triangles. The shaders sit around and wait while all those boxes and triangles get discovered. Here’s a diagram of how it works with the GTX 10-series’ Pascal GPU.

Turing behaves differently. The shaders launch a ray probe, and instead of having to process the thousands of instructions via software emulation, they boot the testing over to the dedicated RT core hardware, leaving the shaders free to perform other tasks.

RT cores consist of two specialized parts. First, box intersection evaluators plow through all the boxes created during BVH traversal to find the final set of triangles. After that, the workload’s handed off to a second unit to perform ray-triangle intersection tests. The RT cores pass the final information back to the shaders for image processing.
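Nvidia hasn’t detailed how the second unit does its math, but a ray-triangle intersection test is easy to sketch in software. The example below uses the well-known Möller–Trumbore algorithm as a stand-in; the function name and the sample triangle are my own, and nothing here claims to mirror what the RT core hardware does internally.

```python
from typing import Optional, Tuple

Vec3 = Tuple[float, float, float]

def sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])

def dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]

def cross(a: Vec3, b: Vec3) -> Vec3:
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])

def ray_triangle_hit(origin: Vec3, direction: Vec3,
                     v0: Vec3, v1: Vec3, v2: Vec3,
                     eps: float = 1e-9) -> Optional[float]:
    """Moller-Trumbore test: distance along the ray to the hit, or None on a miss."""
    edge1, edge2 = sub(v1, v0), sub(v2, v0)
    p = cross(direction, edge2)
    det = dot(edge1, p)
    if abs(det) < eps:
        return None  # ray is parallel to the triangle's plane
    inv_det = 1.0 / det
    s = sub(origin, v0)
    u = dot(s, p) * inv_det
    if u < 0.0 or u > 1.0:
        return None  # hit point falls outside the triangle
    q = cross(s, edge1)
    v = dot(direction, q) * inv_det
    if v < 0.0 or u + v > 1.0:
        return None
    t = dot(edge2, q) * inv_det
    return t if t > eps else None  # ignore hits behind the ray origin

# Hypothetical triangle lying flat in the z = 0 plane.
tri = ((0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0))
hit = ray_triangle_hit((0.25, 0.25, -1.0), (0.0, 0.0, 1.0), *tri)
miss = ray_triangle_hit((2.0, 2.0, -1.0), (0.0, 0.0, 1.0), *tri)
print(hit, miss)
```

The returned distance is what lets a renderer work out where the ray landed, which is the location information that gets handed back to the shaders.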

Splitting the computationally expensive ray tracing task up between shaders and dedicated RT cores works wonders for performance. Nvidia says that while the GTX 1080 Ti can generate 1.1 Giga Rays per second, the Turing GPU in the GeForce RTX 2080 Ti can run ray tracing at a clip of 10-plus Giga Rays per second. It’s the dedicated hardware that makes real-time ray tracing, a feat the industry expected to be years away, possible with GeForce RTX today, albeit in hybrid form.
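Giga Rays are an abstract unit, so here’s a quick back-of-the-envelope calculation of what those figures mean per pixel. The 4K, 60-frames-per-second target is my assumption for illustration, not an Nvidia number, and “10-plus” is treated as a flat 10.

```python
# Assumed target for illustration (not from Nvidia): 4K at 60 frames per second.
WIDTH, HEIGHT, FPS = 3840, 2160, 60

def rays_per_pixel_per_frame(giga_rays_per_second: float) -> float:
    """Spread a rays-per-second budget evenly over every pixel of every frame."""
    rays_per_second = giga_rays_per_second * 1e9
    pixels_per_second = WIDTH * HEIGHT * FPS
    return rays_per_second / pixels_per_second

pascal_budget = rays_per_pixel_per_frame(1.1)   # GTX 1080 Ti figure quoted above
turing_budget = rays_per_pixel_per_frame(10.0)  # RTX 2080 Ti, "10-plus" taken as 10

print(f"GTX 1080 Ti: {pascal_budget:.1f} rays per pixel per frame")
print(f"RTX 2080 Ti: {turing_budget:.1f} rays per pixel per frame")
```

Under those assumptions the GTX 1080 Ti has roughly two rays to spend on each pixel per frame, while the RTX 2080 Ti has about 20. That’s still a tight budget, which helps explain the hybrid approach.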

That’s not the end of the special sauce, though. Ray tracing and other Nvidia RTX features also wouldn’t be possible if Turing lacked tensor cores.

Inside Nvidia’s Turing GPU: Tensor cores and NGX

“Tensor Cores are specialized execution units designed specifically for performing the tensor / matrix operations that are the core compute function used in Deep Learning,” Nvidia says. They’re used for machine learning tasks.

The GeForce RTX 2080 and 2080 Ti are the first consumer graphics cards to include tensor cores, which originally appeared in Nvidia’s pricey Volta data center GPUs. Unlike Volta tensor cores, however, Turing’s include “new INT8 and INT4 precision modes for inferencing workloads that can tolerate quantization and don’t require FP16 precision.” FP16 is crucial for gaming uses, though, and Turing supports it to the tune of 114 teraflops.
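What does it mean for a workload to “tolerate quantization”? The rough idea is that values get squeezed into a small set of integer levels, which costs some precision. Here’s a minimal sketch using simple symmetric quantization; the scheme, the function names, and the sample weights are assumptions chosen for illustration, not a description of how Turing’s tensor cores handle INT8 or INT4 data.

```python
def quantize(values, bits):
    """Map floats onto signed integer codes using one shared scale factor."""
    qmax = 2 ** (bits - 1) - 1  # 127 levels each side for INT8, 7 for INT4
    largest = max(abs(v) for v in values)
    scale = largest / qmax if largest > 0 else 1.0
    codes = [max(-qmax, min(qmax, round(v / scale))) for v in values]
    return codes, scale

def dequantize(codes, scale):
    """Turn integer codes back into approximate floats."""
    return [c * scale for c in codes]

def worst_error(values, bits):
    """Largest gap between an original value and its quantized round trip."""
    codes, scale = quantize(values, bits)
    restored = dequantize(codes, scale)
    return max(abs(a - b) for a, b in zip(values, restored))

# Hypothetical network weights, made up for this example.
weights = [0.9, -1.0, 0.33, 0.05]
error_int8 = worst_error(weights, 8)
error_int4 = worst_error(weights, 4)

print(f"INT8 worst-case error: {error_int8:.4f}")
print(f"INT4 worst-case error: {error_int4:.4f}")
```

Fewer bits means coarser steps and bigger rounding errors, which is why the lower-precision modes only suit workloads that can shrug off that loss.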

That’s a lot of numbers and specialized terms. What do Turing’s tensor cores actually do? Well, to start, there’s a reason they mop things up at the end of a frame. Tensor cores basically leverage AI to clean up or alter the images created by the CUDA and RT cores. Crucially, they help “denoise” ray tracing. Real-time ray tracing isn’t new; it’s been around for ages but didn’t perform well. Ray traced scenes often appear visually grainy due to the limited number of rays you can cast without choking performance, as you can see in this ray traced Quake 2 video (which was not created for or tested on Nvidia’s RTX). Turing’s tensor cores use machine learning to help identify and eliminate that graininess.
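To see where the grain comes from in the first place, consider this toy simulation. It isn’t a denoiser and has nothing to do with Nvidia’s code: it assumes a made-up pixel where each randomly cast ray has a 50 percent chance of reaching a light, then measures how much the pixel’s estimated brightness jumps around when you average only a few rays versus a lot of them.

```python
import random
import statistics

def pixel_estimate(rays, rng, lit_probability=0.5):
    """Average brightness from `rays` random rays; each one either sees the light or not."""
    hits = sum(1 for _ in range(rays) if rng.random() < lit_probability)
    return hits / rays

def grain(rays, trials=500, seed=1):
    """Spread (standard deviation) of the estimate across many independent pixels."""
    rng = random.Random(seed)  # fixed seed so the run is repeatable
    estimates = [pixel_estimate(rays, rng) for _ in range(trials)]
    return statistics.pstdev(estimates)

grain_few = grain(rays=4)     # a tight real-time budget
grain_many = grain(rays=256)  # closer to an offline render

print(f"4 rays per pixel:   spread {grain_few:.3f}")
print(f"256 rays per pixel: spread {grain_many:.3f}")
```

The spread shrinks only with the square root of the ray count, so brute-forcing a clean image gets expensive fast. Cleaning up a low-ray-count image after the fact is the cheaper route.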

That’s not the only application though. Nvidia’s new NGX (“neural graphics framework”) suite leverages tensor cores in multiple ways, and some are pretty damned interesting.

“NGX utilizes deep neural networks (DNNs) and set of ‘Neural Services’ to perform AI-based functions that accelerate and enhance graphics, rendering, and other client-side applications,” Nvidia says. Basically, the company uses its Saturn V supercomputer to train AI on how best to optimize a game around an NGX feature, and then pushes that pre-trained information out to RTX 2080 and 2080 Ti owners via the GeForce Experience app. Once installed, the graphics card’s tensor cores use that AI training model in-game, performing the same tasks on your local hardware.

Some of the first NGX features include AI-boosted slow-mo video, and an “AI Inpainting” tool that lets you “remove existing content from an image and use an NGX AI algorithm to replace the removed content with a realistic computer-generated alternative. For example, Inpainting could be used to automatically remove power lines from a landscape image, replacing them seamlessly with the existing sky background.” Sounds nifty!

Other NGX features prove more relevant for gamers. AI Super Rez increases the effective resolution of an image or video by 2x, 4x, or 8x by training the NGX AI on a “ground truth” image as near to perfect as possible. It can then upgrade even low-resolution images by using that “ground truth” information to interpret what’s being shown, and intelligently adding pixels where they’re needed to improve the resolution. Nvidia says its video super-resolution network can upscale 1080p to 4K in real time at 30 frames per second.

(I suggest clicking the images above and below to examine them in finer detail.)

But the most intriguing NGX feature must be Deep Learning Super Sampling (DLSS), an antialiasing method that provides much sharper results than traditional Temporal Anti-Aliasing (TAA) at a much lower performance cost. Epic’s Infiltrator demo runs at just under 40 frames per second on the GTX 1080 Ti at 4K resolution with TAA enabled; it runs just shy of 60 fps on the RTX 2080 Ti using the same settings, or at 80 fps with DLSS enabled. Hot damn!
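Frame rates can hide how much time is actually being saved, so here’s the same comparison expressed as time per frame. The figures are rounded from the approximate numbers above (“just under 40” becomes 40, “just shy of 60” becomes 60), so treat the output as ballpark.

```python
def frame_time_ms(fps: float) -> float:
    """Milliseconds the GPU gets to spend on each frame at a given frame rate."""
    return 1000.0 / fps

# Rounded versions of the Infiltrator demo figures quoted above.
results = {
    "GTX 1080 Ti + TAA": 40.0,
    "RTX 2080 Ti + TAA": 60.0,
    "RTX 2080 Ti + DLSS": 80.0,
}

baseline = results["GTX 1080 Ti + TAA"]
for name, fps in results.items():
    print(f"{name}: {frame_time_ms(fps):.1f} ms per frame, "
          f"{fps / baseline:.2f}x the GTX 1080 Ti")
```

By that rough math, the RTX 2080 Ti with DLSS finishes each frame in half the time the GTX 1080 Ti needs with TAA.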

Nvidia’s Turing architecture whitepaper explains how it works:

“The key to this result is the training process for DLSS, where it gets the opportunity to learn how to produce the desired output based on large numbers of super-high-quality examples. To train the network, we collect thousands of “ground truth” reference images rendered with the gold standard method for perfect image quality, 64x supersampling (64xSS). 64x supersampling means that instead of shading each pixel once, we shade at 64 different offsets within the pixel, and then combine the outputs, producing a resulting image with ideal detail and anti-aliasing quality. We also capture matching raw input images rendered normally. Next, we start training the DLSS network to match the 64xSS output frames, by going through each input, asking DLSS to produce an output, measuring the difference between its output and the 64xSS target, and adjusting the weights in the network based on the differences, through a process called back propagation. After many iterations, DLSS learns on its own to produce results that closely approximate the quality of 64xSS, while also learning to avoid the problems with blurring, disocclusion, and transparency that affect classical approaches like TAA.

In addition to the DLSS capability described above, which is the standard DLSS mode, we provide a second mode, called DLSS 2X. In this case, DLSS input is rendered at the final target resolution and then combined by a larger DLSS network to produce an output image that approaches the level of the 64x super sample rendering - a result that would be impossible to achieve in real time by any traditional means.”
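The whitepaper’s recipe boils down to two ideas: build “ground truth” targets by shading each pixel at 64 offsets, then repeatedly nudge a model’s weights to shrink the gap between its output and those targets. The sketch below is a deliberately tiny stand-in and not DLSS. The scene is a hypothetical diagonal edge, and the “network” is a two-parameter linear model whose gradient is worked out by hand, playing the role that back propagation plays in a real neural network.

```python
def shade(x, y, edge):
    """Hypothetical scene: white on one side of a diagonal edge, black on the other."""
    return 1.0 if x + y < edge else 0.0

def render_raw(edge):
    """Normal rendering: shade the pixel once, at its center."""
    return shade(0.5, 0.5, edge)

def render_64xss(edge, grid=8):
    """Shade at 64 offsets (an 8x8 grid) inside the pixel and average the results."""
    total = 0.0
    for i in range(grid):
        for j in range(grid):
            total += shade((i + 0.5) / grid, (j + 0.5) / grid, edge)
    return total / (grid * grid)

def mean_squared_error(w, b, pairs):
    """Average squared difference between the model's output and the 64xSS target."""
    return sum((w * raw + b - target) ** 2 for raw, target in pairs) / len(pairs)

def train(pairs, steps=500, lr=0.1):
    """Gradient descent on a toy model: output = w * raw + b."""
    w, b = 0.0, 0.0
    for _ in range(steps):
        grad_w = grad_b = 0.0
        for raw, target in pairs:
            diff = (w * raw + b) - target  # how far off the current output is
            grad_w += 2 * diff * raw / len(pairs)
            grad_b += 2 * diff / len(pairs)
        w -= lr * grad_w  # adjust the weights based on the differences
        b -= lr * grad_b
    return w, b

# Training set: the same edge at several positions, rendered both ways.
edges = [0.25 * k for k in range(1, 8)]
pairs = [(render_raw(e), render_64xss(e)) for e in edges]

loss_before = mean_squared_error(0.0, 0.0, pairs)
w, b = train(pairs)
loss_after = mean_squared_error(w, b, pairs)

print(f"center sample: {render_raw(1.0)}, 64xSS: {render_64xss(1.0)}")
print(f"loss before training: {loss_before:.4f}, after: {loss_after:.4f}")
```

With the edge running through the middle of the pixel, the single center sample comes back fully black while the 64-sample average lands at a mid-gray. That’s the jaggy-versus-smooth difference supersampling exists to fix. A two-parameter model can only learn a crude correction from data like this; the real DLSS network is vastly larger and works on whole images.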

More than a dozen games are already lined up for DLSS support. Here’s a full list of every game that supports ray tracing or DLSS.

Developers need to actively support NGX features in their games and applications. Nvidia offers an NGX API and SDK, and it doesn’t charge developers to train NGX features. Tony Tamasi, Nvidia’s senior VP of content and technology, says the company has successfully integrated NGX features into existing games in under a day.

Inside Nvidia’s Turing GPU: Display and video

Finally, let’s wrap things up with changes to Turing’s display and video handling. The GeForce RTX 2070, 2080, and 2080 Ti all support VirtualLink, a new USB-C alternate-mode standard that contains all the video, audio, and data connections necessary to power a virtual reality headset. Turing also supports low-latency native HDR output with tone mapping, and 8K at up to 60Hz on dual displays. The DisplayPorts on GeForce RTX graphics cards are also DisplayPort 1.4a ready.

Turing also includes improvements in video encoding and decoding, which should be of particular interest to streamers and other video enthusiasts. Check out the details in the screenshot below.

That wraps up our deep-dive into the Turing GPU, the cutting-edge monster at the heart of the GeForce RTX 2080 and 2080 Ti. Check out our roundup of the custom GeForce RTX graphics cards you can preorder right now ahead of their launch on September 20.