Apple's child abuse photo scanning: What it is and why people are worried

- 09 August, 2021 21:00

Apple announced last week that it will begin scanning all photos uploaded to iCloud for potential child sexual abuse material (CSAM). It's come under a great deal of scrutiny and generated some outrage, so here's what you need to know about the new technology before it rolls out later this year.

What are the technologies Apple is rolling out?

Apple will be rolling out new anti-CSAM features in three areas: Messages, iCloud Photos, and Siri and Search. Here's how each of them will be implemented, according to Apple:

Messages: The Messages app will use on-device machine learning to warn children and parents about sensitive content.

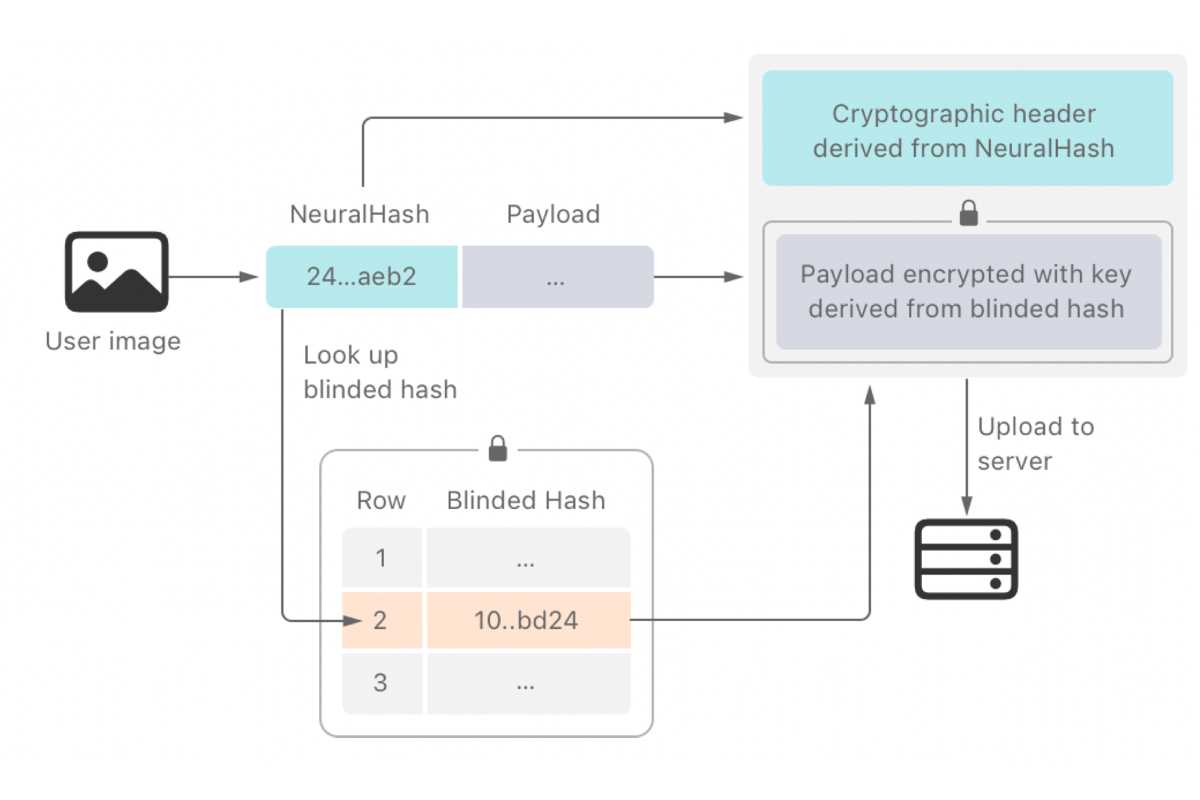

iCloud Photos: Before an image is stored in iCloud Photos, an on-device matching process is performed for that image against the known CSAM hashes.

Siri and Search: Siri and Search will provide additional resources to help children and parents stay safe online and get help with unsafe situations.

When will the new technologies arrive?

Apple says the Messages and Siri and Search features will arrive in iOS 15, iPadOS 15, and macOS Monterey. To be clear, Apple doesn't say the technologies will arrive when iOS 15 lands, so they could come in a follow-up update. The iCloud Photos scanning doesn't have a specific release date but will presumably arrive later this year as well.

The new CSAM detection tools will arrive with the new OSes later this year. Image: Apple

Does this mean Apple will be able to see my photos?

Not exactly. Here's how Apple explains the technology: Instead of scanning images in the cloud, the system performs on-device matching using a database of known CSAM image hashes provided by the National Center for Missing and Exploited Children (NCMEC) and other child safety organizations. Apple further transforms this database into an unreadable set of hashes that is securely stored on users' devices.
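To make the idea of matching against known hashes concrete, here is a minimal Python sketch. It is not Apple's implementation: it substitutes plain SHA-256 digests and an ordinary in-memory set for whatever hashing and unreadable storage Apple actually uses, and the names `image_hash`, `KNOWN_HASHES`, and `matches_known_hash` are hypothetical.

```python
import hashlib

def image_hash(image_bytes: bytes) -> str:
    """Stand-in fingerprint: SHA-256 of the raw bytes (an assumption, not Apple's hash)."""
    return hashlib.sha256(image_bytes).hexdigest()

# Hypothetical database of known hashes, built here from placeholder bytes.
KNOWN_HASHES = {image_hash(b"known-image-1"), image_hash(b"known-image-2")}

def matches_known_hash(image_bytes: bytes) -> bool:
    """Return True only if the image's fingerprint is already in the database."""
    return image_hash(image_bytes) in KNOWN_HASHES

print(matches_known_hash(b"known-image-1"))   # True
print(matches_known_hash(b"vacation-photo"))  # False
```

The point of the sketch is that the check compares fingerprints rather than looking at what a photo depicts, so a photo that is not already in the database cannot match.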

But Apple is scanning photos on my device, right?

It is. However, Apple says the system does not work for users who have iCloud Photos disabled.

What happens if the system detects CSAM images?

For one, it shouldn't. Since the system only works with CSAM image hashes provided by NCMEC, it will only report photos that are known CSAM in iCloud Photos. If it does detect CSAM, Apple will then conduct a human review before deciding whether to make a report to NCMEC. Apple says there is no automated reporting to law enforcement, though it will report any instances to the appropriate authorities.
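The sequence described so far can be sketched as a simple decision flow. This is an illustration of the steps as the article describes them, not Apple's code; the `Outcome` enum and the `handle_photo` function and its parameters are hypothetical names.

```python
from enum import Enum

class Outcome(Enum):
    NOT_SCANNED = "not scanned"
    NO_MATCH = "no match"
    DISMISSED_AFTER_REVIEW = "dismissed after human review"
    REPORTED_TO_NCMEC = "reported to NCMEC"

def handle_photo(icloud_photos_enabled: bool,
                 hash_matched: bool,
                 reviewer_confirms: bool) -> Outcome:
    """Walk through the steps in order: gate, match, human review, report."""
    if not icloud_photos_enabled:
        # The system does not work when iCloud Photos is disabled.
        return Outcome.NOT_SCANNED
    if not hash_matched:
        # Only photos matching known hashes go any further.
        return Outcome.NO_MATCH
    if not reviewer_confirms:
        # A human review happens before any report is made.
        return Outcome.DISMISSED_AFTER_REVIEW
    return Outcome.REPORTED_TO_NCMEC

print(handle_photo(True, True, True).value)    # reported to NCMEC
print(handle_photo(False, True, True).value)   # not scanned
```

Note that no path leads straight from a match to a report: the human review step always sits in between.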

Can I opt out of the iCloud Photos CSAM scanning?

No, but you can disable iCloud Photos to prevent Apple from scanning images.

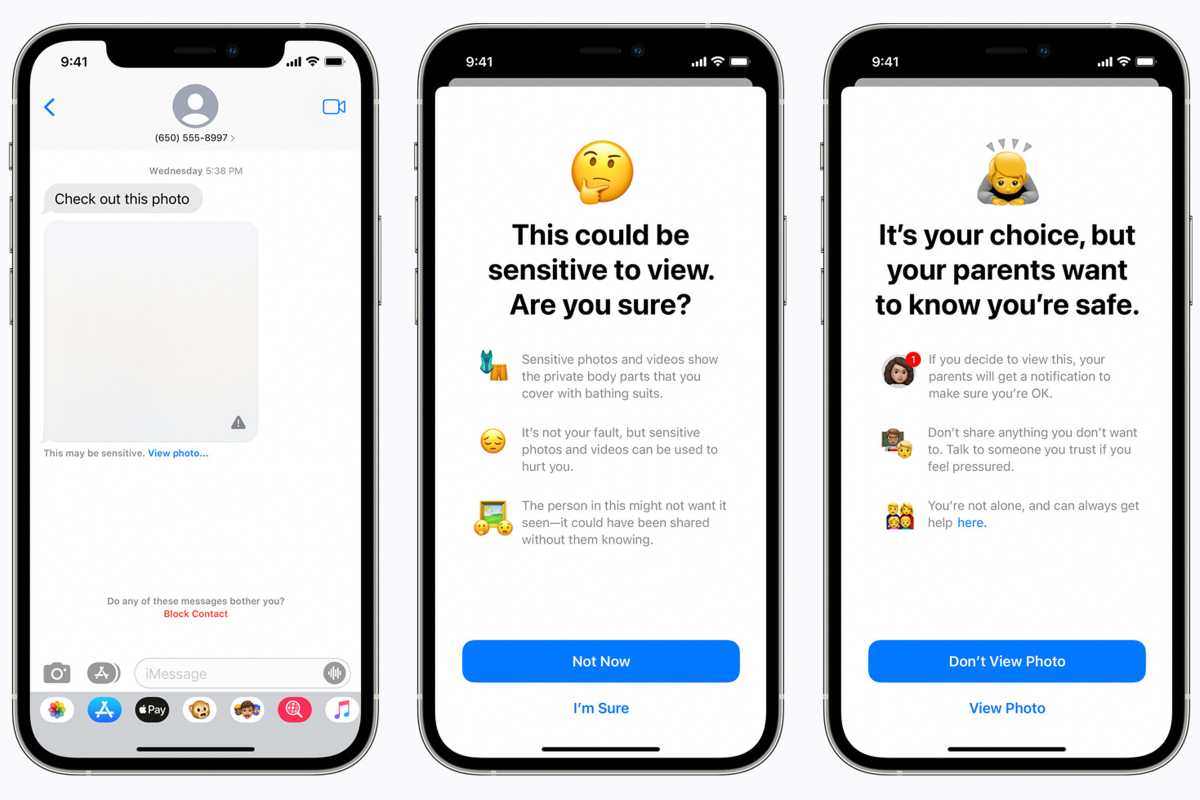

Image: Apple

Is Apple scanning all of my photos in Messages too?

Not exactly. Apple's safety measures in Messages are designed to protect children and are only available for child accounts set up as families in iCloud.

So how does it work?

Communication safety in Messages is different from CSAM scanning. Rather than using image hashes to compare against known images, it analyzes images on the device for sexually explicit content. Images are not shared with Apple or any other agency, including NCMEC.

Can parents opt out?

Parents need to specifically opt in to use the new Messages image scanning.

Will iMessages still be end-to-end encrypted?

Apple says communication safety in Messages doesn't change the privacy features baked into Messages, and Apple never gains access to communications. Furthermore, none of the communications, image evaluation, interventions, or notifications are available to Apple.

What happens if a sexually explicit image is discovered?

When a child aged 13-17 sends or receives a sexually explicit image, the photo will be blurred and the child will be warned, presented with resources, and reassured it is okay if they do not want to view or send the photo. For accounts of children aged 12 and under, parents can set up parental notifications, which will be sent if the child confirms and sends or views an image that has been determined to be sexually explicit.
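The age rules above can be sketched as a small Python function. This is only an illustration of the rules as described here, not Apple's logic: the function name, parameters, and action strings are hypothetical, and it assumes that children 12 and under see the same blur and warning as older children before they confirm.

```python
from typing import List

def messages_actions(age: int,
                     parent_opted_in: bool,
                     notifications_on: bool,
                     child_proceeds: bool) -> List[str]:
    """Actions taken for an image already judged sexually explicit on the device."""
    # The feature applies only to child accounts whose parents opted in.
    if not parent_opted_in or age >= 18:
        return []
    actions = ["blur image", "warn child and offer resources"]
    # Parents are notified only for children 12 and under, only if they
    # enabled notifications, and only if the child goes ahead anyway.
    if age <= 12 and notifications_on and child_proceeds:
        actions.append("notify parents")
    return actions

print(messages_actions(15, True, True, True))
print(messages_actions(10, True, True, True))
```

In this sketch a 15-year-old who proceeds never triggers a parental notification, while a 10-year-old does only when every condition is met.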

What's new in Siri and Search?

Apple is enhancing Siri and Search to help people find resources for reporting CSAM, and is expanding guidance in both by providing additional resources to help children and parents stay safe online and get help with unsafe situations. Apple is also updating Siri and Search to intervene when users search for queries related to CSAM. Apple says the interventions will include explaining to users that interest in this topic is harmful and problematic and providing resources from partners to get help with this issue.

Can the CSAM system be used to scan for other image types?

Not presently. Apple says the system is only designed to scan for CSAM images. However, Apple could theoretically tweak the parameters to look for images related to other things, such as LGBTQ+ content.

What if a government forces Apple to scan for other images?

Apple says it will refuse such demands.


Do other companies scan for CSAM images?

Yes. Most cloud services, including Dropbox, Google, and Microsoft, as well as Facebook, have systems in place to detect CSAM images.

So why are people upset?

While most people agree that Apple's system is appropriately limited in scope, experts, watchdogs, and privacy advocates are concerned about the potential for abuse. For example, Edward Snowden, who exposed global surveillance programs by the NSA and is living in exile, tweeted, "No matter how well-intentioned, Apple is rolling out mass surveillance to the entire world with this. Make no mistake: if they can scan for kiddie porn today, they can scan for anything tomorrow." Additionally, Matthew Green, a cryptography professor at Johns Hopkins, explained the potential for misuse with the system Apple is using.

Credit: IDG

People are also concerned that Apple is sacrificing the privacy built into the iPhone by using the device to scan for CSAM images. While many other services scan for CSAM images, Apple's system is unique in that it uses on-device matching rather than scanning images after they have been uploaded to the cloud.

Could Apple be blocked from implementing its CSAM detection system?

It's hard to say, but it's likely that there will be legal battles both before and after the new technologies are implemented.